EZThrottle: Making Failure Boring Again

Modern software doesn't fail in exciting ways.

It fails with 429s, timeouts, and regions quietly going dark.

And somehow we've all accepted that this means:

- retry loops everywhere

- exponential backoff copy-pasted into every codebase

- someone eventually waking up at 2 a.m.

I built EZThrottle because I got tired of pretending this was normal.

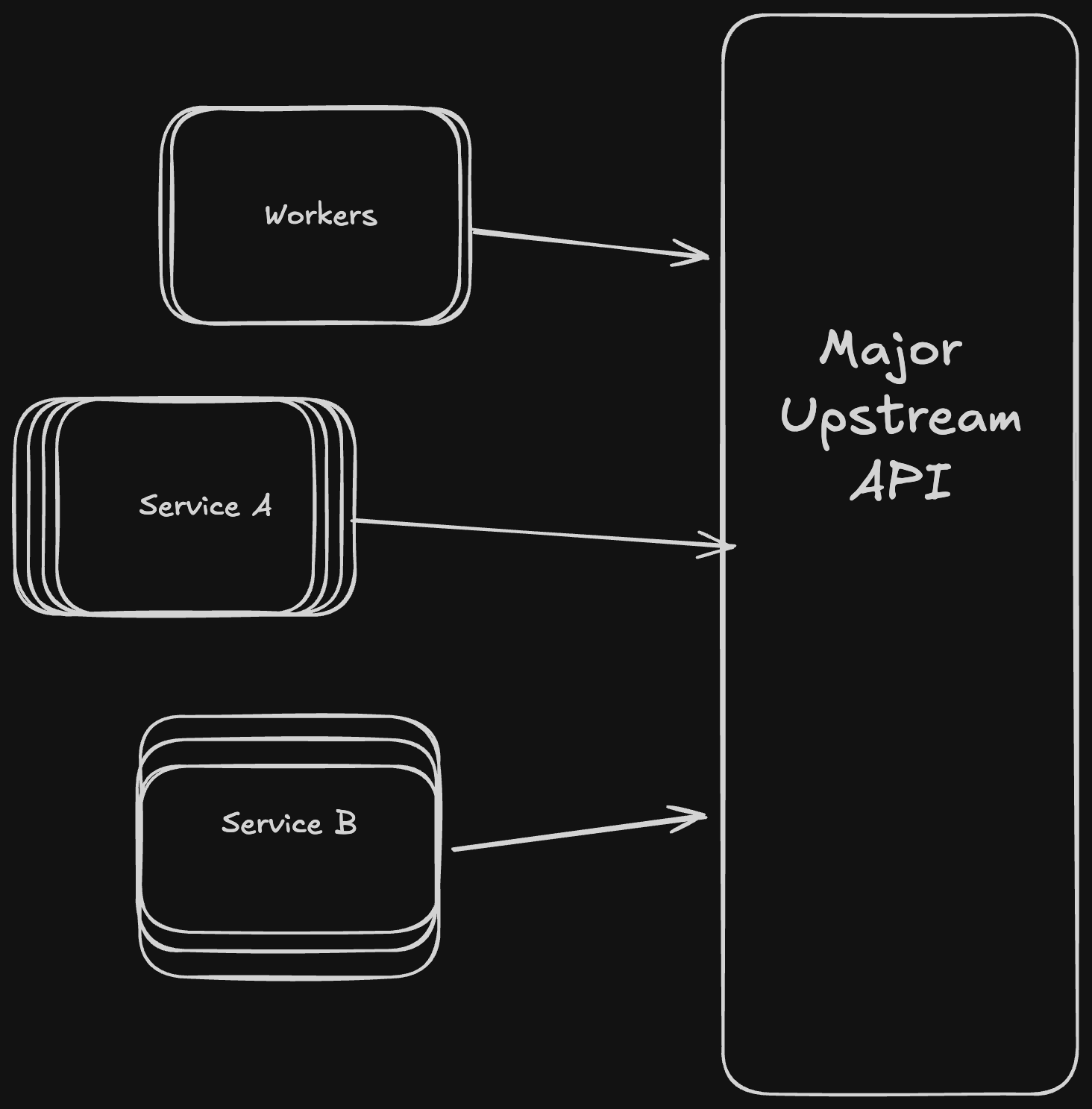

The Problem Nobody Really Talks About

Most systems deal with failure in isolation.

Each service:

- sends a request

- gets a 429 or timeout

- retries on its own schedule

- hopes for the best

This works... until it doesn't.

At scale, independent retries turn into retry storms:

- rate limits get hammered

- upstreams get slower

- outages cascade

- tail latency explodes

The real issue isn't that APIs fail. It's that every client is blind to what every other client is experiencing.

Failure isn't shared state.

The Simple Idea That Changed Everything

EZThrottle is built around one opinionated idea:

Retries shouldn't be independent.

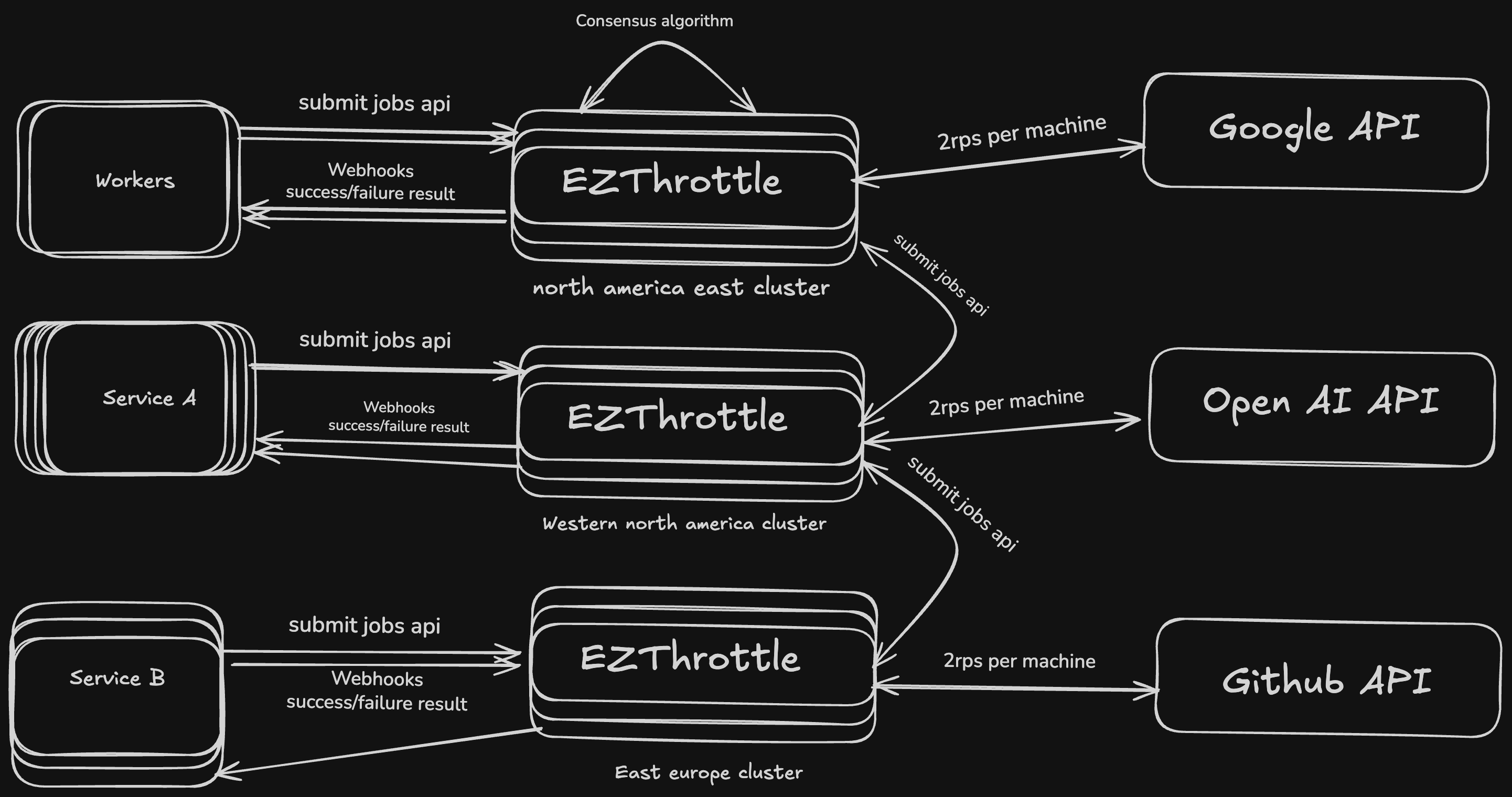

Instead of thousands of machines panicking at once, EZThrottle coordinates failure in one place.

All outbound requests flow through EZThrottle, which keeps track of:

- how fast a destination can actually handle traffic

- which regions are healthy

- when it's time to slow down or reroute

Once failure becomes shared state, it stops being chaos.

Why This Runs on the BEAM (and Why That Matters)

EZThrottle is written in Gleam and runs on Erlang/OTP.

That choice wasn't about trends—it was about survival.

The BEAM was designed for:

- massive concurrency

- message passing instead of shared memory

- processes that fail without taking everything else down

- distributed coordination that doesn't fall apart under load

EZThrottle isn't trying to make one HTTP call fast. It's trying to coordinate millions of them safely. This is exactly what the BEAM is good at.

429s Aren't Errors — They're Signals

A 429 isn't your API yelling at you. It's your API asking you to slow down.

Most systems ignore that signal and keep retrying anyway.

EZThrottle takes the hint.

The boring default (on purpose)

By default, EZThrottle limits outbound traffic to 2 requests per second per target domain.

Not globally. Not per account. Per destination.

Examples:

- api.stripe.com → 2 RPS

- api.openai.com → 2 RPS

- api.anthropic.com → 2 RPS

This default exists to prevent your infrastructure from accidentally turning into a distributed denial-of-service attack.

It smooths bursts, stops retry storms, and keeps upstreams healthy.

Yes, it's conservative. That's the point.
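To make the per-destination idea concrete, here is a minimal sketch of pacing requests by target domain. It is not EZThrottle's implementation (which runs on the BEAM); `PerDomainPacer` and `reserve` are hypothetical names, and the clock is passed in explicitly so the behavior is easy to follow.

```python
from urllib.parse import urlparse


class PerDomainPacer:
    """Spaces out requests so each domain gets at most `rps` requests per second."""

    def __init__(self, rps: float = 2.0):
        self.interval = 1.0 / rps  # seconds between requests to one domain
        self.next_slot = {}        # domain -> earliest time the next request may go out

    def reserve(self, url: str, now: float) -> float:
        """Return the time at which a request to `url` may be sent."""
        domain = urlparse(url).hostname
        send_at = max(now, self.next_slot.get(domain, now))
        self.next_slot[domain] = send_at + self.interval
        return send_at
```

Four requests to the same domain arriving at the same instant get spread over 1.5 seconds, while a request to a different domain goes out immediately. Each destination has its own budget.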

How Rate Limits Work

EZThrottle enforces rate limits automatically. Clients don't control the rate — the service maintainer does.

If you're building an API and want to tell EZThrottle how fast you can handle traffic, respond with these headers:

    X-EZTHROTTLE-RPS: 5
    X-EZTHROTTLE-MAX-CONCURRENT: 10

EZThrottle reads these and adjusts. No client configuration needed; rate limiting happens at the infrastructure layer.

The important part isn't the knobs. It's this:

Rate limiting becomes shared state instead of a thousand sleep() calls.
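As a rough illustration of how a coordinator might consume those two headers, here is a sketch that turns a response's headers into updated limits for one destination. The header names come from this post; everything else (`DestinationLimits`, `apply_hints`, and the choice to ignore malformed or non-positive values) is an assumption made for the example.

```python
from dataclasses import dataclass, replace
from typing import Mapping, Optional


@dataclass(frozen=True)
class DestinationLimits:
    rps: float = 2.0                      # the conservative default described earlier
    max_concurrent: Optional[int] = None  # None means no hint has been received yet


def _positive(raw, cast):
    """Parse a header value; return None if it is missing, malformed, or not positive."""
    if raw is None:
        return None
    try:
        value = cast(raw.strip())
    except ValueError:
        return None
    return value if value > 0 else None


def apply_hints(limits: DestinationLimits, headers: Mapping[str, str]) -> DestinationLimits:
    """Return updated limits, keeping the current value for any missing or invalid header."""
    # HTTP header names are case-insensitive, so normalize before looking them up.
    lowered = {name.lower(): value for name, value in headers.items()}
    rps = _positive(lowered.get("x-ezthrottle-rps"), float)
    max_concurrent = _positive(lowered.get("x-ezthrottle-max-concurrent"), int)
    return replace(
        limits,
        rps=rps if rps is not None else limits.rps,
        max_concurrent=max_concurrent if max_concurrent is not None else limits.max_concurrent,
    )
```

Because the limits live in one place, a single response carrying these headers changes the pace for every caller of that destination.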

When Things Actually Break (5xx and Outages)

Regions go down. Configs break. Dependencies flake out.

EZThrottle assumes this will happen.

When a request fails with a 5xx or times out:

- that region is marked as unhealthy

- traffic is rerouted to healthy regions

- optionally, requests are raced across regions

The result isn't "everything is perfect."

The result is: a small latency bump instead of a full outage.
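Here is one way the "mark unhealthy, then reroute" steps could be sketched. The post doesn't say how or when a region is considered healthy again, so the 30-second cooldown below is purely an assumption, as are the `RegionHealth` class and its method names.

```python
class RegionHealth:
    """Tracks which regions recently failed so traffic can be routed around them."""

    def __init__(self, regions, cooldown: float = 30.0):
        self.regions = list(regions)
        self.cooldown = cooldown   # assumed recovery window, in seconds
        self.unhealthy_until = {}  # region -> time at which it may be tried again

    def record(self, region: str, status, now: float) -> None:
        """`status` is an HTTP status code, or None when the request timed out."""
        if status is None or 500 <= status <= 599:
            self.unhealthy_until[region] = now + self.cooldown

    def healthy(self, now: float):
        """Return the regions that are currently eligible for traffic, in order."""
        return [r for r in self.regions if self.unhealthy_until.get(r, 0.0) <= now]
```

A 429 deliberately does not mark a region unhealthy in this sketch: it is a pacing signal, not an outage.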

Region Racing: Let the Fastest One Win

Sometimes you don't want to wait. You just want the request to finish.

EZThrottle supports region racing:

- send the same request to multiple regions

- accept the first success

- cancel the rest

Here's what that looks like:

    from ezthrottle import EZThrottle, Step, StepType

    client = EZThrottle(api_key="your_api_key")

    result = (
        Step(client)
        .url("https://api.example.com/endpoint")
        .method("POST")
        .type(StepType.PERFORMANCE)
        # Send the same request to three regions and accept the first success.
        .regions(["iad", "lax", "ord"])
        .execution_mode("race")
        .webhooks([{"url": "https://your-app.com/webhook"}])
        .execute()
    )

This isn't about chasing microbenchmarks. It's about predictable completion when the world is messy.
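If you're curious what racing means mechanically, the three steps (send everywhere, take the first success, cancel the rest) can be sketched with plain `asyncio`. This is an illustration of the pattern, not EZThrottle's code; `race` and `fake_region_call` are hypothetical, and the fake call just sleeps instead of making a request.

```python
import asyncio


async def race(attempts):
    """Run every attempt concurrently, return the first success, cancel the rest."""
    tasks = [asyncio.ensure_future(attempt) for attempt in attempts]
    pending = set(tasks)
    try:
        while pending:
            done, pending = await asyncio.wait(pending, return_when=asyncio.FIRST_COMPLETED)
            for task in done:
                if task.exception() is None:
                    return task.result()
        raise RuntimeError("every region failed")
    finally:
        for task in tasks:
            task.cancel()  # no-op for tasks that already finished
        # Wait for the cancellations to land and collect any leftover errors.
        await asyncio.gather(*tasks, return_exceptions=True)


async def fake_region_call(region: str, delay: float, fail: bool = False) -> str:
    """Stand-in for a real request: sleeps, then succeeds or raises."""
    await asyncio.sleep(delay)
    if fail:
        raise ConnectionError(f"{region} returned 503")
    return region
```

With `iad` failing quickly, `lax` answering next, and `ord` lagging behind, the race returns `lax` and the `ord` attempt is cancelled. A fast failure doesn't win the race; only a success does.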

FRUGAL vs PERFORMANCE (How Teams Actually Use This)

Not every request needs maximum reliability.

EZThrottle supports two common patterns:

- FRUGAL: Try locally first. Forward only when things go wrong.

- PERFORMANCE: Always run through EZThrottle for distributed reliability.

Here's a FRUGAL example:

    from ezthrottle import EZThrottle, Step, StepType

    client = EZThrottle(api_key="your_api_key")

    result = (
        Step(client)
        .url("https://api.example.com/endpoint")
        .type(StepType.FRUGAL)
        # Try locally first; forward only when one of these status codes comes back.
        .fallback_on_error([429, 500, 502, 503])
        .webhooks([{"url": "https://your-app.com/webhook"}])
        .execute()
    )

This lets teams start cheap and gradually move reliability into infrastructure instead of application code.
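The FRUGAL decision itself is small enough to sketch in a few lines. This is a guess at the shape of the logic, not the SDK's internals: `frugal_send`, `send_direct`, and `forward` are hypothetical names, and the status list simply mirrors the example above.

```python
FALLBACK_STATUSES = frozenset({429, 500, 502, 503})


def frugal_send(send_direct, forward, fallback_on=FALLBACK_STATUSES):
    """Try the direct call first; forward only if it returns a listed status.

    `send_direct` and `forward` are hypothetical zero-argument callables that
    each return an HTTP status code.
    """
    status = send_direct()
    if status in fallback_on:
        # The local attempt hit a rate limit or server error: hand the request off.
        return "forwarded", forward()
    return "direct", status
```

A 200 or a 404 stays on the direct path, since neither is in the fallback list. Only the statuses you opted into trigger the handoff.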

The Tradeoff EZThrottle Makes (On Purpose)

EZThrottle prioritizes:

- predictable completion

- bounded failure

- coordinated recovery

Over:

- maximum burst throughput

- raw, unbounded speed

If you want every request to fire as fast as possible, EZThrottle isn't for you.

If you want to stop waking up to retry storms, it probably is.

What EZThrottle Is (and Isn't)

EZThrottle is:

- a reliable HTTP transport

- a coordination layer for outbound requests

- a way to make failure boring again

EZThrottle is not:

- a database

- a general compute platform

- a replacement for business logic

Requests live in memory, move through the system, and disappear. There's nothing to mine, leak, or hoard.

Why This Matters

Most infrastructure complexity exists to compensate for unreliable networks.

EZThrottle doesn't eliminate failure.

It eliminates panic.

By turning retries, rate limits, and region health into shared state, it gives you something rare in distributed systems:

Predictable behavior when things go wrong.